Introduction

There are numerous techniques to improve your website’s SEO, and optimizing the WordPress Robots.txt file is one of the most effective. Because the Robots.txt file tells search engines which parts of your website they may crawl, it is well known as a powerful SEO tool, and tweaking it can improve your site’s SEO. In today’s blog post, we will walk you through some approaches to optimizing the WordPress Robots.txt file.

The definition of the Robots.txt file

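The Robots.txt file is a plain text file in your website’s root folder that tells search engine crawlers which pages, folders, or files they may or may not crawl. Below is an example of WordPress’s Robots.txt file.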

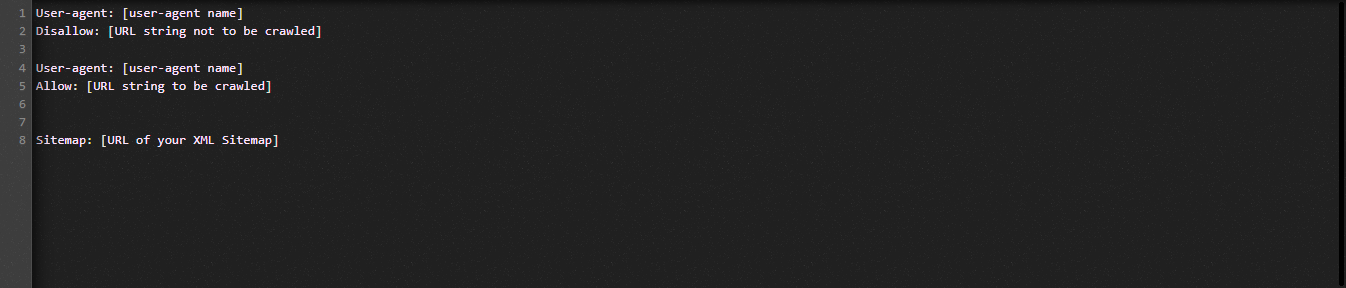

Let’s now look at the fundamental structure of the Robots.txt file. Here are the terms it uses:

- User-agent: The name of the crawler that the rules apply to.

- Disallow: Prevents a website’s pages, folders, or files from being crawled.

- Allow: Permits pages, folders, or files to be crawled.

- Sitemap (optional): Shows the location of your sitemap.

- *: Stands for any sequence of characters. In the User-agent line, it means all crawlers.

- $: Marks the end of the URL. You can use it to target pages with a specific extension; for example, Disallow: /*.pdf$ blocks URLs that end in .pdf.
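To make the * and $ symbols more concrete, here is a minimal Python sketch of how a crawler might test one Allow or Disallow path pattern against a URL path. It assumes Google-style matching (patterns match from the start of the path, * matches any run of characters, and a trailing $ anchors the end of the URL); the helper names pattern_to_regex and path_matches are hypothetical, and individual crawlers may differ in the details.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex.

    Assumes Google-style semantics: the pattern is matched from the
    start of the URL path, '*' matches any sequence of characters
    (including none), and a trailing '$' means "end of the URL".
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each escaped '*' back into '.*'.
    body = re.escape(pattern).replace(r"\*", ".*")
    # \Z anchors at the true end of the string when the rule ended in '$'.
    return re.compile(body + (r"\Z" if anchored else ""))


def path_matches(pattern: str, path: str) -> bool:
    """Return True if the rule pattern applies to the given URL path."""
    # re.match anchors at the start only, which mirrors prefix matching.
    return pattern_to_regex(pattern).match(path) is not None


if __name__ == "__main__":
    print(path_matches("/wp-admin/", "/wp-admin/options.php"))  # True: prefix match
    print(path_matches("/*.pdf$", "/files/guide.pdf"))         # True
    print(path_matches("/*.pdf$", "/files/guide.pdf?v=2"))     # False: URL does not end in .pdf
    print(path_matches("/*?", "/shop/?orderby=price"))         # True: '*' spans "shop/"
```

Without $, a rule is just a prefix, which is why Disallow: /wp-admin/ also covers every file inside that folder.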

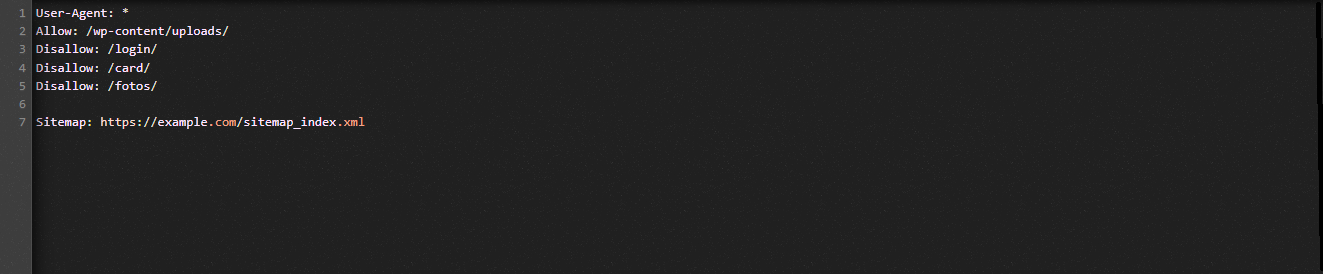

Here is how to read the example illustration:

In this Robots.txt example, we allow search engines to crawl the files in the WordPress uploads folder.

However, we block search engines from the login, card, and photo folders.

Finally, we provide the URL of the sitemap.
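If you want to experiment with a file like the one in the illustration, Python’s standard urllib.robotparser module can read it and answer “may this crawler fetch this URL?”. The sketch below is only an approximation of the illustration: /wp-content/uploads/ is WordPress’s standard uploads folder, but the /login/, /card/, and /photo/ paths and the example.com sitemap URL are hypothetical stand-ins, and build_parser is a hypothetical helper. Keep in mind that this module uses plain prefix matching, applies the first rule that matches, and ignores * and $, so it is a rough check rather than a copy of how Google reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical Robots.txt modeled on the illustration: allow the uploads
# folder, block the login, card, and photo folders, and list a sitemap.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-content/uploads/
Disallow: /login/
Disallow: /card/
Disallow: /photo/

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse Robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for path in ("/wp-content/uploads/2024/logo.png", "/login/", "/card/checkout", "/about/"):
        url = "https://example.com" + path
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(path, "->", verdict)
    # site_maps() returns the Sitemap URLs (Python 3.8+), or None if there are none.
    print("Sitemaps:", rp.site_maps())
```

Paths that no rule mentions, such as /about/, stay crawlable by default.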

Why should you create a Robots.txt file for your WordPress website?

Even without a Robots.txt file, search engines will still crawl and index your website. However, they will crawl everything they can reach rather than just the folders or pages you choose.

There are two key reasons to create a Robots.txt file for your website. First, it lets you manage how search engines crawl your content: you decide which pages or directories they may crawl. For example, you can stop search engines from crawling plugin files, admin pages, or theme directories. As a result, crawlers spend their limited time on your site (often called the crawl budget) on the pages that matter, which can help those pages get indexed faster.

The second reason is to keep unneeded posts or pages away from search engines, so that they are less likely to show up in search results. Keep in mind that Disallow only stops crawling: a blocked page can still be indexed if other pages link to it. To reliably keep a WordPress page or post out of search results, add a noindex tag to a page that crawlers are allowed to fetch.

How to optimize the WordPress Robots.txt file efficiently

In this tutorial, we’ll show you two different methods to create and improve the Robots.txt file on your WordPress website:

- Making changes to the Robots.txt file using a plugin: All in One SEO

- Manually editing the Robots.txt file with FTP

Optimize the WordPress Robots.txt using the All in One SEO plugin

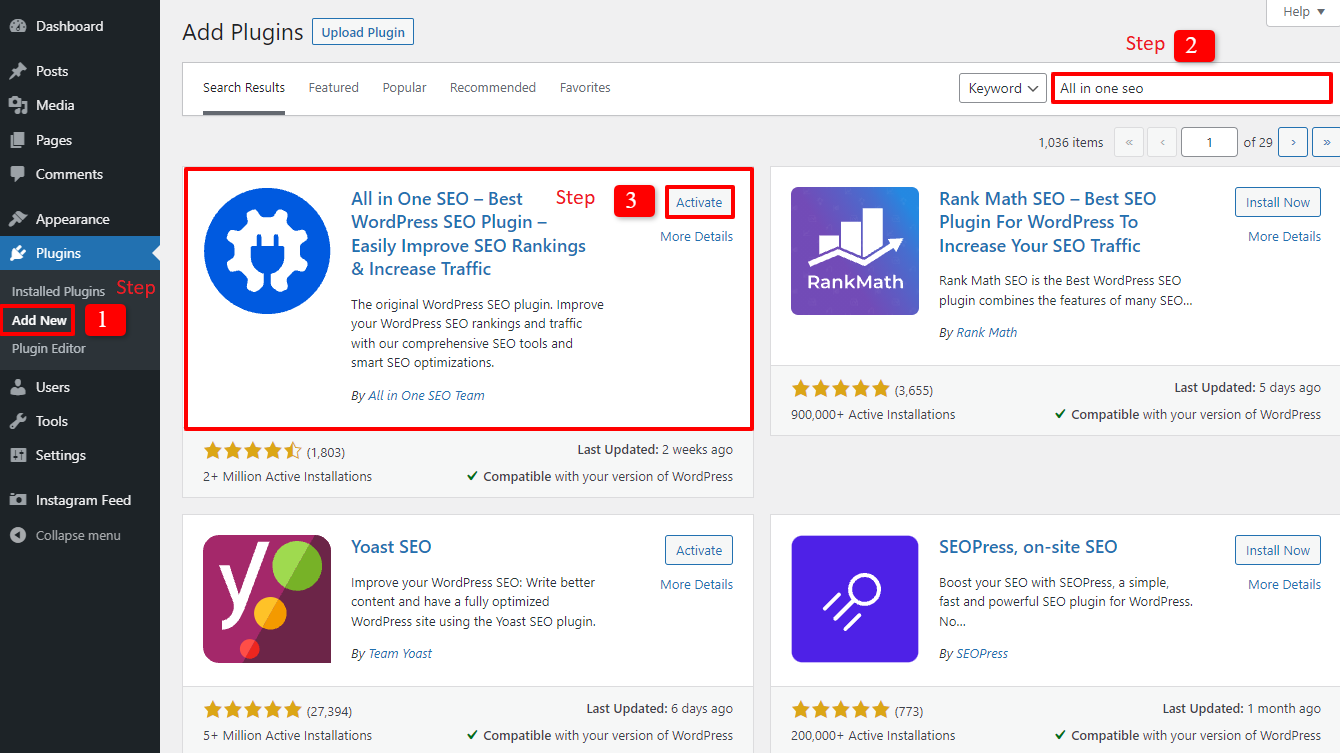

Several WordPress SEO plugins can help you with this task. In this article, we’ve chosen All in One SEO, a popular plugin with over 2 million users.

First, install it by going to Plugins -> Add New. Then search for the plugin’s name, install it, and activate it.

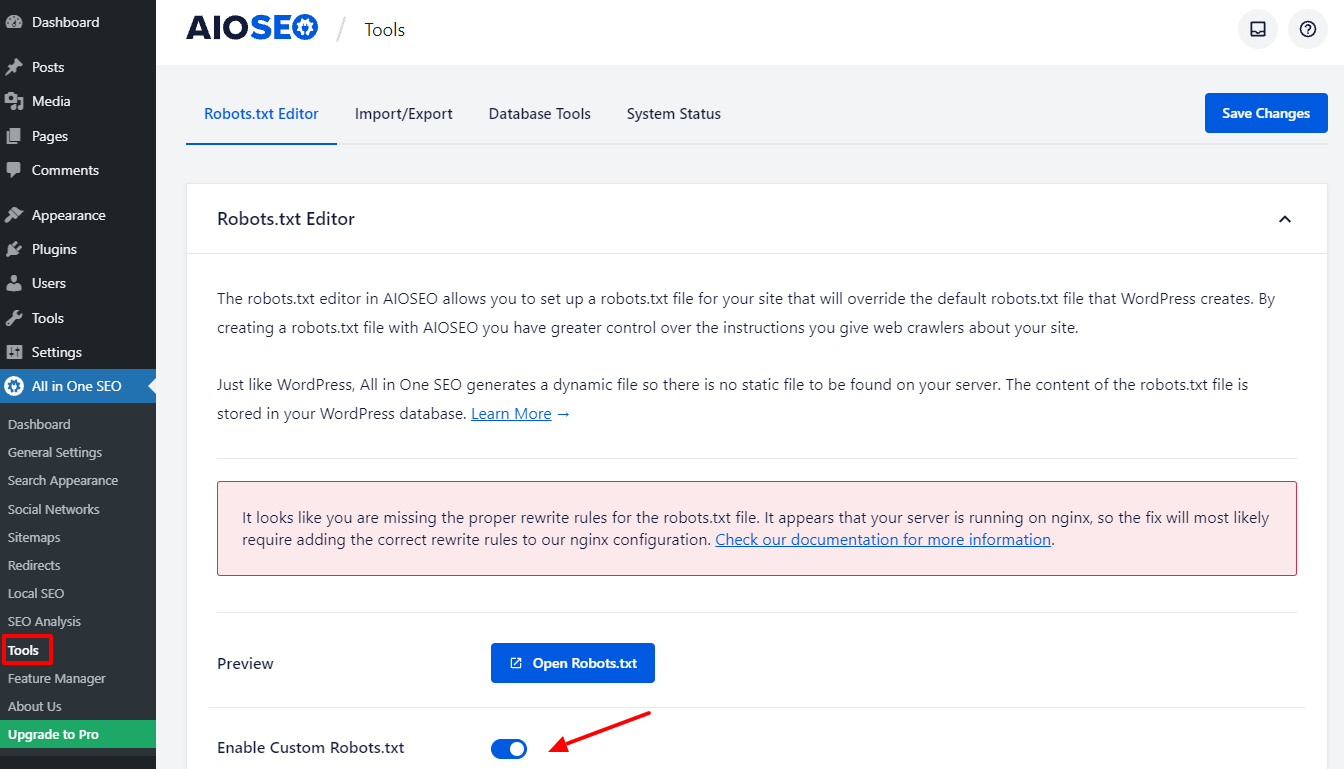

After completing a few easy setup steps, you will return to the admin dashboard. Now go to All in One SEO -> Tools.

In the Robots.txt Editor section, turn on the Enable Custom Robots.txt toggle. Once it is on, you can edit the default WordPress Robots.txt file.

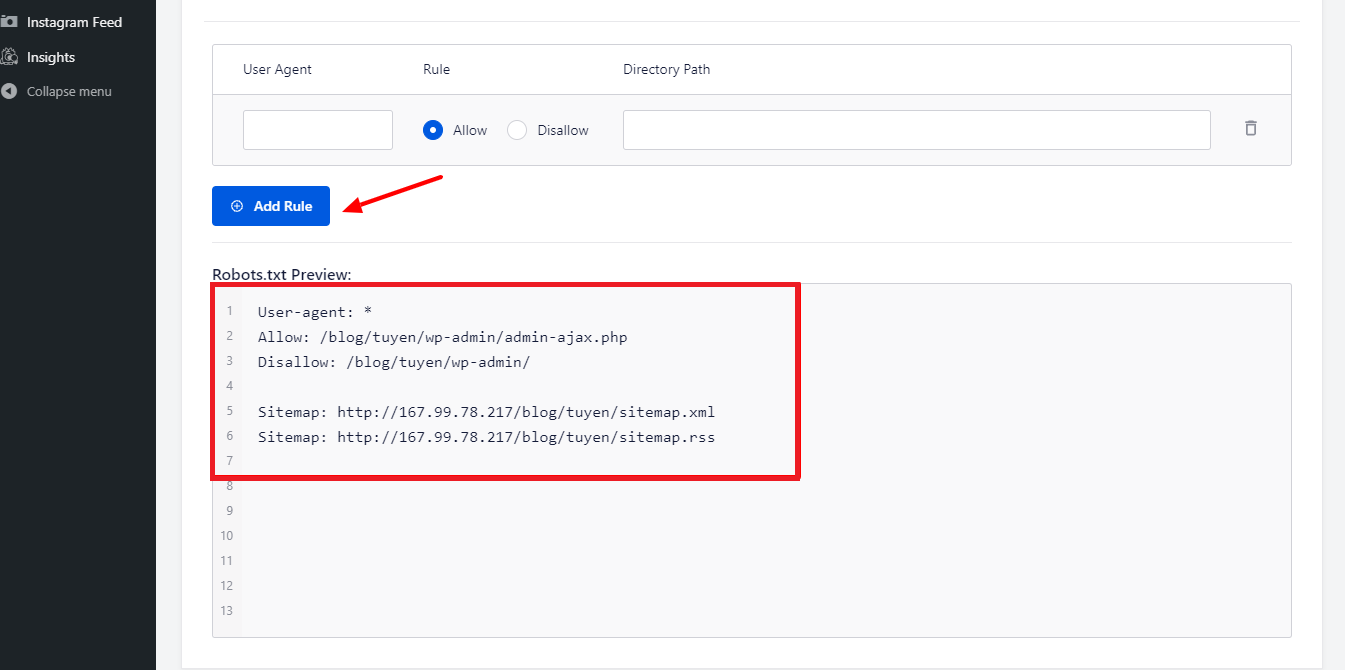

If you scroll down, you will see a box that previews your Robots.txt file. At first, it shows WordPress’s default rules.

By selecting the Add Rule button, you can add more of your own specific rules to help your website’s SEO.

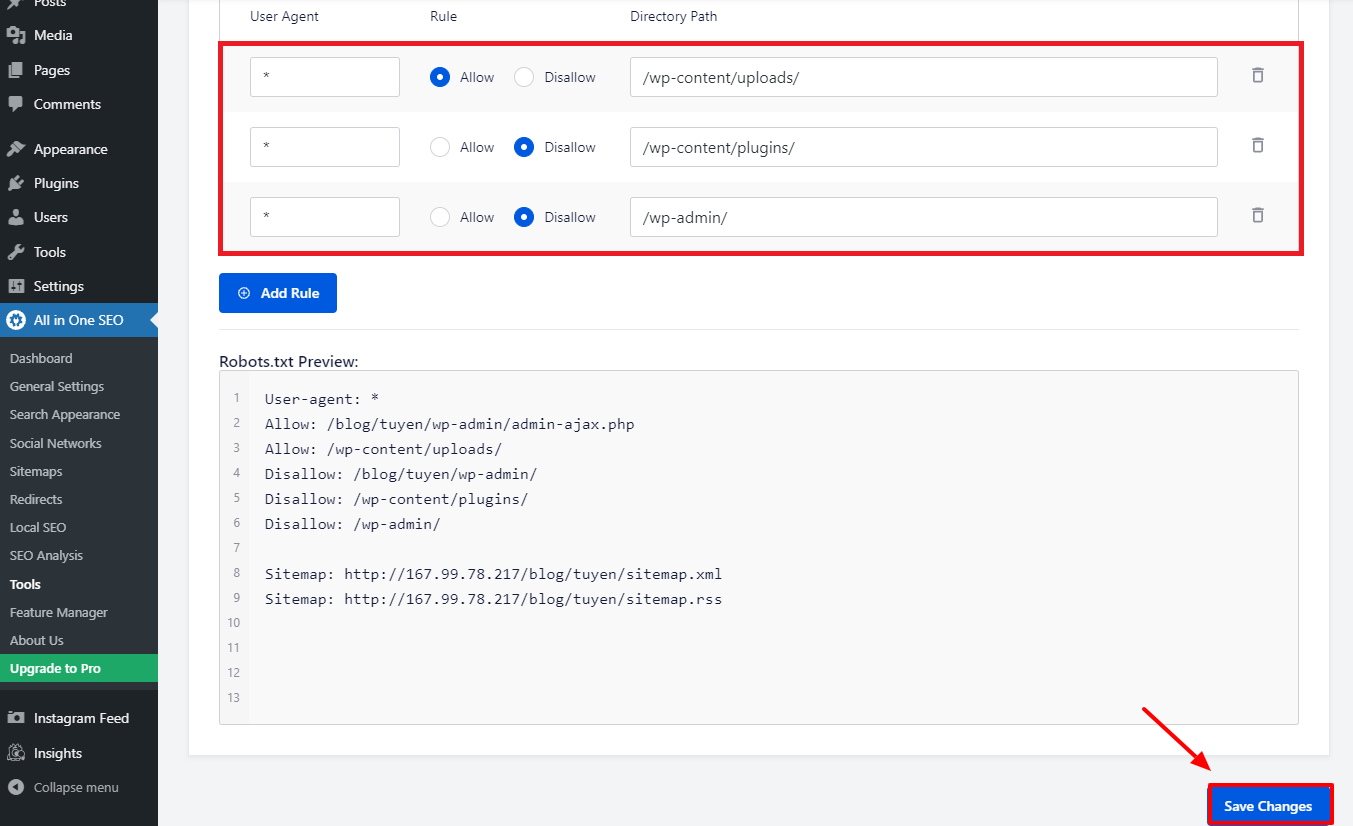

To add a new rule, enter * in the User-Agent field so that the rule applies to all user agents.

Next, select Allow or Disallow to tell search bots whether they may crawl that path.

Then enter the file name or directory path in the Directory Path field.

When you’re done, click the Save Changes button.

The rules you added will be applied to your Robots.txt file immediately.
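Conceptually, each rule you add in the plugin is a combination of a user agent, Allow or Disallow, and a directory path, and those rules end up as lines in the Robots.txt file. The Python sketch below imitates that step with a hypothetical Rule type and render_robots_txt function; it is not All in One SEO’s actual code, and the plugin’s exact output (ordering, spacing) may differ. It also assumes, for simplicity, that every path starts with “/”.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Rule:
    """One row from a rule editor: who it applies to, what to do, and where."""
    user_agent: str  # e.g. "*" for all crawlers
    directive: str   # "Allow" or "Disallow"
    path: str        # file name or directory path, e.g. "/wp-admin/"


def render_robots_txt(rules: List[Rule]) -> str:
    """Group rules by user agent and emit them as Robots.txt text."""
    groups: Dict[str, List[Rule]] = {}
    for rule in rules:
        if rule.directive not in ("Allow", "Disallow"):
            raise ValueError(f"unsupported directive: {rule.directive!r}")
        # This sketch requires paths to start with '/'.
        if not rule.path.startswith("/"):
            raise ValueError(f"path should start with '/': {rule.path!r}")
        # dict keeps insertion order, so groups appear in the order first seen.
        groups.setdefault(rule.user_agent, []).append(rule)

    blocks = []
    for agent, agent_rules in groups.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"{r.directive}: {r.path}" for r in agent_rules]
        blocks.append("\n".join(lines))
    # A blank line separates user-agent groups.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    print(render_robots_txt([
        Rule("*", "Disallow", "/wp-admin/"),
        Rule("*", "Allow", "/wp-admin/admin-ajax.php"),
        Rule("*", "Disallow", "/photo/"),
    ]))
```

Rejecting unknown directives and relative paths up front mirrors the kind of mistakes the plugin’s form fields help you avoid.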

Manually modify and optimize the WordPress Robots.txt using FTP

If you prefer, you can optimize the WordPress Robots.txt file manually instead. For this method, you edit the Robots.txt file with an FTP client.

First, use your FTP client to connect to your WordPress hosting account. Once connected, look for the Robots.txt file in your site’s root folder. Right-click it and select View/Edit.

If you can’t find the file, your site probably doesn’t have a physical one yet (WordPress serves a virtual one by default). In that case, right-click in the root folder and select Create new file, and name it robots.txt in lowercase.

Because Robots.txt is a plain text file, you can also download it, edit it in a plain text editor such as Notepad or TextEdit, and then upload it back to your website’s root folder. Easy, isn’t it?
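Python’s standard ftplib module can also script the upload step. This is a minimal sketch under assumptions: upload_robots_txt is a hypothetical helper, and the host name, credentials, and /public_html root folder in the usage comment are placeholders (your host’s root folder may be named differently). Note that ftplib speaks FTP and FTPS (via FTP_TLS), not SFTP.

```python
import io
from ftplib import FTP


def upload_robots_txt(ftp: FTP, content: str, root_dir: str = "/") -> None:
    """Upload Robots.txt content to the site's root folder over an open FTP session.

    The file is stored as lowercase 'robots.txt' because crawlers request
    exactly /robots.txt. Trailing spaces are trimmed and line endings are
    normalized to Unix newlines before upload.
    """
    normalized = "\n".join(line.rstrip() for line in content.splitlines()) + "\n"
    ftp.cwd(root_dir)
    # STOR creates the file or overwrites an existing one.
    ftp.storbinary("STOR robots.txt", io.BytesIO(normalized.encode("utf-8")))


# Example usage (placeholders; not run here):
#   with FTP("ftp.example.com") as ftp:
#       ftp.login("your-user", "your-password")
#       upload_robots_txt(ftp, robots_text, "/public_html")
```

Taking the FTP session as a parameter keeps the helper easy to try against a fake object before pointing it at a real server.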

Make sure your WordPress site’s Robots.txt file is working

Once you’ve prepared and configured your Robots.txt file, you should test it so that any issues can be found and fixed. To do this, use a Robots.txt tester tool; we highly recommend Google Search Console.

To use this service, your website must be connected to Google Search Console. You can then open the Robots Testing Tool and select your property from the drop-down list. The tool automatically loads your website’s Robots.txt file and alerts you if it contains a mistake. Note that Google has since replaced this tester with a robots.txt report in Search Console, so the exact steps may differ.
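For a quick local check, the sketch below shows the precedence rule Google documents for conflicting rules: the most specific (longest) matching path wins, and Allow wins a tie. It uses WordPress’s usual default rules as input, where a simple first-match parser would wrongly block admin-ajax.php. For brevity it uses plain prefix matching without * or $ support; the is_allowed function and its (directive, path) rule format are hypothetical, and this is no substitute for Google’s own tools.

```python
from typing import List, Tuple

# WordPress's usual default rules (the virtual file it serves when no
# physical Robots.txt exists), written as (directive, path) pairs.
WP_DEFAULT_RULES: List[Tuple[str, str]] = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]


def is_allowed(rules: List[Tuple[str, str]], path: str) -> bool:
    """Decide whether a URL path may be crawled under one user-agent group.

    Longest-match rule: among the rules whose path is a prefix of the URL
    path, the longest one wins; on a tie, Allow wins. Paths matched by no
    rule are allowed. Wildcards are not handled in this sketch.
    """
    best_len = -1
    allowed = True
    for directive, rule_path in rules:
        # An empty rule path (e.g. "Disallow:") matches nothing.
        if not rule_path or not path.startswith(rule_path):
            continue
        is_allow = directive == "Allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len = len(rule_path)
            allowed = is_allow
    return allowed


if __name__ == "__main__":
    for p in ("/wp-admin/", "/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/"):
        verdict = "allowed" if is_allowed(WP_DEFAULT_RULES, p) else "blocked"
        print(p, "->", verdict)
```

Because the Allow path is longer than the Disallow path, admin-ajax.php stays crawlable while the rest of /wp-admin/ is blocked, regardless of the order the rules appear in.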

Conclusion

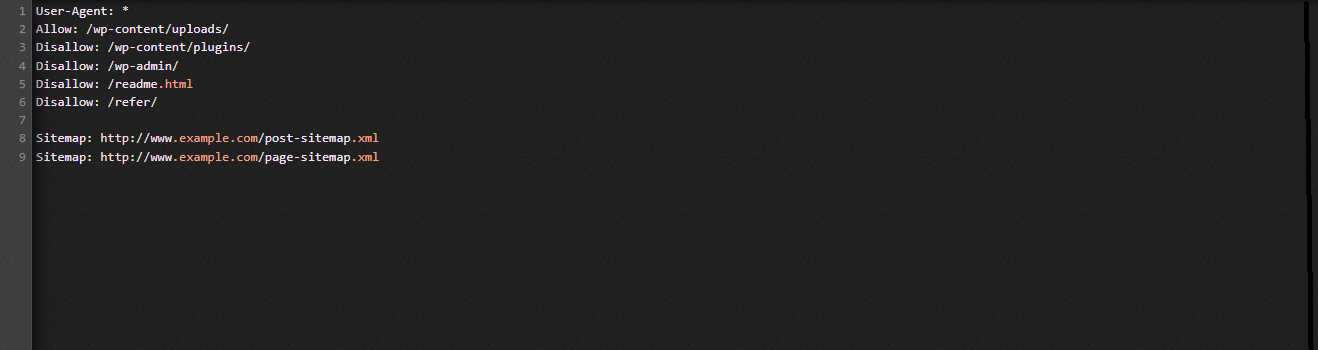

Overall, the main goal of optimizing the Robots.txt file is to stop search bots from crawling pages that the general public does not need, such as plugin folders or admin folders. By contrast, blocking WordPress tags, categories, or archive pages won’t help your SEO. In other words, a good Robots.txt file for your WordPress site keeps crawlers out of back-end areas while leaving your public content open to them.
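To double-check that a finished file follows this advice, you could run a small sanity check before publishing it. The sketch below uses Python’s standard urllib.robotparser to confirm that the admin area is blocked while tag, category, and date-archive URLs stay crawlable; the candidate file, the sample URLs, and the find_problems helper are all hypothetical examples. The module only does simple prefix matching, so keep the rules you check this way free of * and $.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical candidate file that blocks only the admin area.
CANDIDATE = """\
User-agent: *
Disallow: /wp-admin/
"""

# Public archive paths that should stay crawlable, and back-end paths that should not.
MUST_ALLOW = ["/tag/seo/", "/category/news/", "/2024/05/"]
MUST_BLOCK = ["/wp-admin/"]


def find_problems(robots_txt: str, base: str = "https://example.com") -> List[str]:
    """Return a list of human-readable problems; an empty list means the check passed."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    problems = []
    for path in MUST_ALLOW:
        if not rp.can_fetch("*", base + path):
            problems.append(f"blocked but should be crawlable: {path}")
    for path in MUST_BLOCK:
        if rp.can_fetch("*", base + path):
            problems.append(f"crawlable but should be blocked: {path}")
    return problems


if __name__ == "__main__":
    print(find_problems(CANDIDATE) or "No problems found")
```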

We hope today’s blog post helps you optimize your WordPress Robots.txt file. Feel free to share it with your friends and other webmasters. If you have any questions, please leave a comment below, and we will respond as soon as we can. Now it’s time to optimize your own WordPress Robots.txt. Until our next post, take care!

By the way, we offer a wide range of modern, attractive, and free WordPress themes that fully support this useful feature. Take a look and pick one to redesign your website!